Advanced PowerShell vol. 1: reusability of code

Hello! As a big fan and active user of PowerShell, I often find that I need to reuse pieces of code I have already written.

In fact, modern programming languages, code reuse is a common thing.

PowerShell in this issue not far behind, and offers developers (napisalem scripts) from multiple referral mechanisms to the previously written code.

Here they are in ascending complexity: the use of functions, dot-sourcing and writing your own modules.

Consider them all in order.

As a solution of laboratory tasks write a script that extends the C:\ to the maximum possible size on a remote Windows server LAB-FS1.

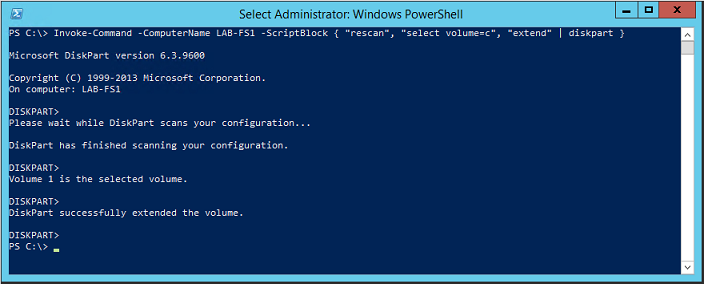

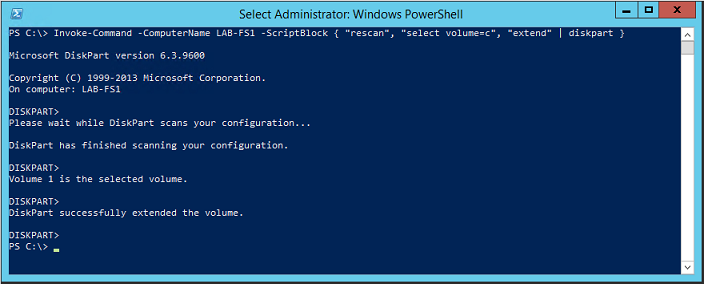

Such a script would be one line and look like this:

the

It works like this. First PowerShell establishes a remote connection to server LAB-FS1 and runs it locally a set of commands in curly brackets option -ScriptBlock. This set, in turn, consecutively transmits the command diskpart three text parameter and diskpart executes (turns) rescan the partitions, select the partition C:\ and expanding it to the maximum possible size.

As you can see, the script is extremely simple but at the same time extremely useful.

Consider how to package it for reuse.

the

The most simple variant.

Suppose we write a great script in which we, for various reasons, it is necessary many times to run the extension sections on different servers. It is more logical to select all the script in a separate function in the same .ps1-file and simply call it as needed. In addition, we will expand the functionality of the script, allowing the administrator to explicitly specify the remote server name and the letter of the expandable section. The name and the letter will pass through settings.

Function and its call would look like this:

the

Here for ExtendDisk-Remotely set two parameters:

the

It can also be noted that the transfer of a local variable in a remote session is done using the keyword using.

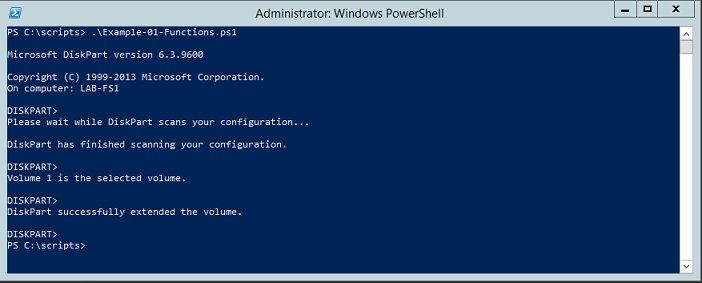

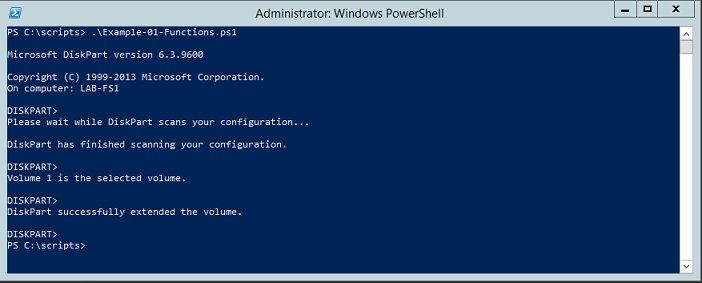

Save the script under the name Example-01-Functions.ps1 and run:

We see that our function was successfully invoked and expanded the C:\ on server LAB-FS1.

the

Complicate the situation. Our function is to expand the sections was so good that we want to resort to using it in other scripts. How to be?

Copy the function text from the source .the. ps1 file and paste it in the everything you need? But if the function code is regularly updated? But if I use this function hundreds of scripts? Obviously, it is necessary to make it in a separate file and connect as required.

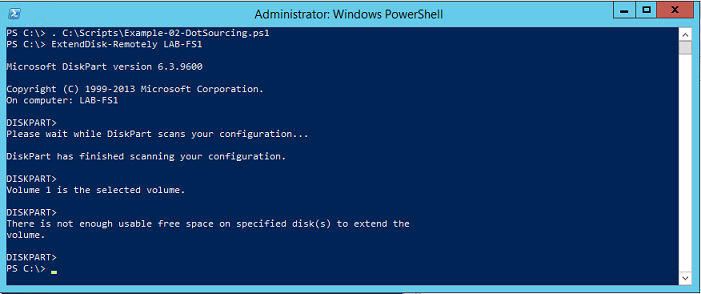

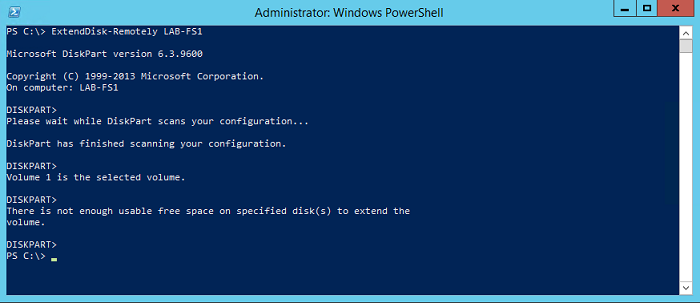

Will create a separate file for all our functions and call it Example-02-DotSourcing.ps1.

Its contents will be:

the

This function Declaration (without challenge) that we have now stored in a separate file and can be called at any time by using a technique called dot sourcing. The syntax looks like this:

the

Article based on information from habrahabr.ru

In modern programming languages, code reuse is commonplace.

PowerShell is no exception: it offers developers (script writers) several mechanisms for referring to previously written code.

Here they are in order of increasing complexity: functions, dot-sourcing, and writing your own modules.

Let's look at each of them in turn.

As a lab exercise, we will write a script that extends the C:\ partition to the maximum possible size on a remote Windows server, LAB-FS1.

Such a script fits on one line and looks like this:

    Invoke-Command -ComputerName LAB-FS1 -ScriptBlock { "rescan", "select volume=c", "extend" | diskpart }

It works like this. First, PowerShell opens a remote connection to the server LAB-FS1 and runs, locally on that server, the set of commands given in curly braces to the -ScriptBlock parameter. That set, in turn, pipes three text commands to diskpart, which executes them one after another: it rescans the disks, selects the C:\ volume, and extends it to the maximum possible size.
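
To make the pipeline part concrete, here is a small sketch that builds the same three diskpart commands as an array and only previews them, without touching any disk. The variable names and the "DISKPART>" prefix are illustrative assumptions, not part of the original one-liner.

```powershell
# The comma operator builds an array of three strings.
$diskpartCommands = "rescan", "select volume=c", "extend"

# Preview only: show each command on its own line, the way diskpart
# would receive it on standard input. Nothing is executed here.
$preview = $diskpartCommands | ForEach-Object { "DISKPART> $_" }
$preview

# The real call pipes the same strings into diskpart on the remote server
# (left commented out because it changes the partition):
# Invoke-Command -ComputerName LAB-FS1 -ScriptBlock { "rescan", "select volume=c", "extend" | diskpart }
```

When an array of strings is piped to a native executable such as diskpart, PowerShell writes each element to that program's standard input as a separate line, which is why diskpart sees three consecutive commands.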

As you can see, the script is extremely simple but at the same time extremely useful.

Let's see how to package it for reuse.

1. The use of functions

The simplest option.

Suppose we are writing a large script in which, for various reasons, we need to extend partitions on different servers many times. It makes sense to move that code into a separate function in the same .ps1 file and simply call it as needed. In addition, we will extend the script's functionality by letting the administrator explicitly specify the remote server name and the letter of the drive to extend. The name and the letter will be passed as parameters.

The function and its call look like this:

    function ExtendDisk-Remotely
    {
        param (
            # Name of the remote server (required)
            [Parameter(Mandatory = $true)]
            [string] $ComputerName,

            # Letter of the drive to extend (defaults to C)
            [Parameter(Mandatory = $false)]
            [string] $DiskDrive = "c"
        )

        # Run diskpart on the remote server, feeding it three commands
        Invoke-Command -ComputerName $ComputerName -ScriptBlock {
            "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
        }
    }

    ExtendDisk-Remotely -ComputerName LAB-FS1

Here ExtendDisk-Remotely has two parameters:

- the mandatory ComputerName;
- the optional DiskDrive. If the drive letter is not given explicitly, the script works with drive C:\.
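
The behaviour of the two parameters can be tried out safely with a stand-in function. The sketch below reuses the same param block, but Show-ExtendPlan is a hypothetical helper invented for this illustration: it only returns a description of what would be done and never calls diskpart.

```powershell
# Hypothetical stand-in for ExtendDisk-Remotely: same parameters,
# but it only describes the work instead of doing it.
function Show-ExtendPlan
{
    param (
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [Parameter(Mandatory = $false)]
        [string] $DiskDrive = "c"
    )

    "On ${ComputerName}: rescan; select volume=$DiskDrive; extend"
}

# DiskDrive is omitted, so the default "c" is used.
$defaultPlan = Show-ExtendPlan -ComputerName LAB-FS1

# Both parameters are given by name.
$namedPlan = Show-ExtendPlan -ComputerName LAB-FS1 -DiskDrive d

# Parameters can also be bound by position, in declaration order.
$positionalPlan = Show-ExtendPlan LAB-FS1 e

$defaultPlan, $namedPlan, $positionalPlan
```

If the mandatory ComputerName is left out in an interactive session, PowerShell prompts for it instead of running the function with an empty value.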

Note also that a local variable is passed into the remote session with the using scope modifier, written as $using:DiskDrive.
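
The effect of the modifier is easy to see in a small experiment. In the sketch below, Start-Job is used only as a local stand-in for a remote session (an assumption made so that the example runs without a second machine); $using: works analogously with Invoke-Command -ComputerName. It requires PowerShell 3.0 or later.

```powershell
$DiskDrive = "d"

# Without the modifier the other session has no variable named $DiskDrive,
# so it expands to an empty string.
$withoutUsing = Start-Job -ScriptBlock { "select volume=$DiskDrive" } |
    Receive-Job -Wait -AutoRemoveJob

# $using: copies the caller's value into the other session.
$withUsing = Start-Job -ScriptBlock { "select volume=$using:DiskDrive" } |
    Receive-Job -Wait -AutoRemoveJob

# Alternative: declare a param block and pass the value with -ArgumentList.
$withArgument = Start-Job -ScriptBlock { param($Drive) "select volume=$Drive" } -ArgumentList $DiskDrive |
    Receive-Job -Wait -AutoRemoveJob

$withoutUsing, $withUsing, $withArgument
```

The -ArgumentList form also works on PowerShell 2.0, where $using: is not available.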

Save the script as Example-01-Functions.ps1 and run it.

Our function is invoked successfully and extends the C:\ partition on server LAB-FS1.

2. Dot-sourcing

Let's complicate things. Our partition-extending function turned out so well that we want to use it in other scripts too. What should we do?

Copy the function text from the original .ps1 file and paste it wherever it is needed? But what if the function code is updated regularly? What if hundreds of scripts need this function? Clearly, it should be moved into a separate file and included as required.

Let's create a separate file for all our functions and call it Example-02-DotSourcing.ps1.

Its contents will be:

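    function ExtendDisk-Remotely
    {
        param (
            # Name of the remote server (required)
            [Parameter(Mandatory = $true)]
            [string] $ComputerName,

            # Letter of the drive to extend (defaults to C)
            [Parameter(Mandatory = $false)]
            [string] $DiskDrive = "c"
        )

        # Run diskpart on the remote server, feeding it three commands
        Invoke-Command -ComputerName $ComputerName -ScriptBlock {
            "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
        }
    }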

This is the function declaration (without a call), which is now stored in a separate file and can be loaded at any time using a technique called dot-sourcing. The syntax looks like this:

    . C:\Scripts\Example-02-DotSourcing.ps1

    ExtendDisk-Remotely LAB-FS1

Look closely at the first line of code: a dot, a space, and then the path to the file containing the function definition.

This syntax includes the contents of Example-02-DotSourcing.ps1 in the current script. It is similar in spirit to the #include directive in C++ (and, more loosely, to using in C#): it brings in code from an external source.

Once the external file is included, the second line can call the functions it contains, which is exactly what we do. An external file can be dot-sourced not only in the body of a script but also in a "bare" PowerShell console.
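
Why the dot matters can be shown with a short experiment. The sketch below creates a throwaway library file in the temp folder (the file name and the Get-Greeting function are hypothetical) and compares running it with the call operator against dot-sourcing it. It assumes a fresh session and an execution policy that allows local scripts to run.

```powershell
# Create a throwaway library file with a single function in it.
$library = Join-Path ([System.IO.Path]::GetTempPath()) "Example-DotSourcing-Demo.ps1"
Set-Content -Path $library -Value 'function Get-Greeting { "Hello from the library file" }'

# The call operator runs the file in a child scope: the function is
# defined there and discarded as soon as the script finishes.
& $library
$afterCall = [bool](Get-Command Get-Greeting -ErrorAction SilentlyContinue)

# Dot-sourcing runs the file in the current scope, so the function stays available.
. $library
$afterDotSource = [bool](Get-Command Get-Greeting -ErrorAction SilentlyContinue)
$greeting = Get-Greeting

Remove-Item $library
"Available after '&': $afterCall; after '.': $afterDotSource; result: $greeting"
```

The same scoping rule applies to variables defined in the dot-sourced file, so a library file is best limited to function definitions.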

Dot-sourcing can be used, and it will work for you, but a more modern method, discussed in the next section, is far more convenient.

3. Writing your own PowerShell module

Note: I am using PowerShell version 4.

One of its features (available since version 3) is that modules are loaded into memory automatically the first time they are used, without calling Import-Module.

In older versions of PowerShell (starting with 2), everything described below will also work, but may require the extra step of importing the modules before using them.
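
For scripts that may land on an older host, the extra step can be made conditional. This is a minimal sketch that assumes the module name RemoteDiskManagement, which is the module built later in this article; the variable names are illustrative.

```powershell
# Module auto-loading is available from PowerShell 3.0 onward.
$major = $PSVersionTable.PSVersion.Major
$needsExplicitImport = $major -lt 3

if ($needsExplicitImport) {
    # On PowerShell 2.0 the module has to be imported by hand.
    Import-Module RemoteDiskManagement
}

"PowerShell $major; explicit Import-Module needed: $needsExplicitImport"
```

Calling Import-Module explicitly is harmless on newer versions as well, so some authors simply keep it at the top of every script.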

We will focus on the modern environment.

Let's complicate things once more.

We have become very good PowerShell programmers, written hundreds of useful functions, split them into dozens of .ps1 files for convenience, and dot-source the files we need in each script. But what if there are dozens of files to dot-source, and they have to be listed in hundreds of scripts? What if we rename several of them? Clearly, we would have to change the paths to those files in every script, which is very inconvenient.

So: no matter how many reusable functions you have written, even if it is just one, put them in a separate module. Writing your own modules is the simplest, best, and most robust way to reuse code in PowerShell.

What is a Windows PowerShell module

A Windows PowerShell module is a set of functionality that, in one form or another, lives in separate files on disk. For example, many of Microsoft's own modules are binary, that is, compiled .dll files. We will write a script module: copy the code from Example-02-DotSourcing.ps1 and save it in a file with the .psm1 extension.

To find out where to save it, look at the contents of the PSModulePath environment variable.
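
One way to display the variable readably is to split it into one folder per line. This is a small sketch; the variable name $moduleFolders is an illustrative choice, and the folders listed will differ between machines and PowerShell versions.

```powershell
# PSModulePath is a single string; split it on the platform's path
# separator (";" on Windows) and drop any empty entries.
$moduleFolders = $env:PSModulePath.Split([System.IO.Path]::PathSeparator) |
    Where-Object { $_ }

$moduleFolders
```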

By default there are three folders in which PowerShell looks for modules.

The value of PSModulePath can be changed through Group Policy, setting module paths for the whole network, but that is another story and we will not go into it here. Instead, we will work with the folder C:\Users\Administrator\Documents\WindowsPowerShell\Modules and save the module there.

The code remains the same:

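    function ExtendDisk-Remotely
    {
        param (
            # Name of the remote server (required)
            [Parameter(Mandatory = $true)]
            [string] $ComputerName,

            # Letter of the drive to extend (defaults to C)
            [Parameter(Mandatory = $false)]
            [string] $DiskDrive = "c"
        )

        # Run diskpart on the remote server, feeding it three commands
        Invoke-Command -ComputerName $ComputerName -ScriptBlock {
            "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
        }
    }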

The only things that change are the folder where the file is saved and its extension.

Very important!

Inside the Modules folder, create a subfolder named after our module. Let that name be RemoteDiskManagement. Save the file inside this subfolder and give it the same name with the .psm1 extension. The result is the file C:\Users\Administrator\Documents\WindowsPowerShell\Modules\RemoteDiskManagement\RemoteDiskManagement.psm1.
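
The folder layout can also be created from PowerShell itself. The sketch below is a dry run under one loud assumption: instead of the real Modules folder it uses a throwaway ModulesDemo folder under the temp directory, so nothing in the user profile is touched. The variable names are illustrative.

```powershell
$moduleName = "RemoteDiskManagement"

# Assumption: a temp folder stands in for
# C:\Users\Administrator\Documents\WindowsPowerShell\Modules.
$modulesRoot  = Join-Path ([System.IO.Path]::GetTempPath()) "ModulesDemo"
$moduleFolder = Join-Path $modulesRoot $moduleName
$moduleFile   = Join-Path $moduleFolder "$moduleName.psm1"

# The subfolder and the .psm1 file must share the module's name.
New-Item -ItemType Directory -Path $moduleFolder -Force | Out-Null

Set-Content -Path $moduleFile -Value @'
function ExtendDisk-Remotely
{
    param (
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,

        [Parameter(Mandatory = $false)]
        [string] $DiskDrive = "c"
    )

    Invoke-Command -ComputerName $ComputerName -ScriptBlock {
        "rescan", "select volume=$using:DiskDrive", "extend" | diskpart
    }
}
'@

# Import by path to confirm the file loads. -DisableNameChecking suppresses
# the warning that "ExtendDisk" is not an approved verb.
Import-Module $moduleFile -Force -DisableNameChecking
$exported = (Get-Command -Module $moduleName).Name
$exported
```

To install the module for real, point $modulesRoot at one of the folders listed in PSModulePath; the explicit Import-Module by path is then no longer needed.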

Our module is ready, and we can check that the system sees it.

The module is visible, and from now on we can call its functions without declaring them in the body of the script or dot-sourcing them first.
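
A check of this kind might look as follows. It is a sketch that assumes the module was saved as RemoteDiskManagement in one of the PSModulePath folders; if it was not, the script says so instead of failing.

```powershell
# Search every folder listed in PSModulePath for the module.
$found = Get-Module -ListAvailable -Name RemoteDiskManagement

if ($found) {
    # Show where the module was discovered...
    $found | Select-Object Name, ModuleBase
    # ...and which commands it makes available.
    Get-Command -Module RemoteDiskManagement
}
else {
    "RemoteDiskManagement was not found in any PSModulePath folder."
}
```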

That's it: we can write dozens of our own modules, edit the code of the functions they contain, and use them at any time, without worrying about which scripts would need a function name or the path to a dot-sourced file changed.

4. Other advanced capabilities

As I wrote, I love PowerShell, and if the community is interested, I can write a dozen more articles about its advanced functionality. Some possible topics: adding help to the functions and modules you write; making your function accept values from the pipeline, and what that demands of the way the script is written; what the pipeline is and how it works; using PowerShell to work with databases; how advanced functions are built and what properties their parameters can have; and so on.

Interesting?

Then I will try to publish one article a week.
