States for dividing in PostgreSQL – What? Why? How?

Function particioniranja tables in PostgreSQL, unfortunately, is not yet actively used by many. In my opinion, very worthy talks about her in his work, Hubert Lubaczewski (depesz.com). I offer you another translation of his article!

Lately I've noticed that more and more often faced with cases where it would be possible to use States for dividing. Although, theoretically, most people know about its existence, actually this feature not too well understand, and some even feared.

So I will try to explain to the best of their knowledge and ability, what it is, why it should be used and how to do it.

As you probably know, PostgreSQL has tables and the tables have data. Sometimes it's just a few lines, and sometimes billions.

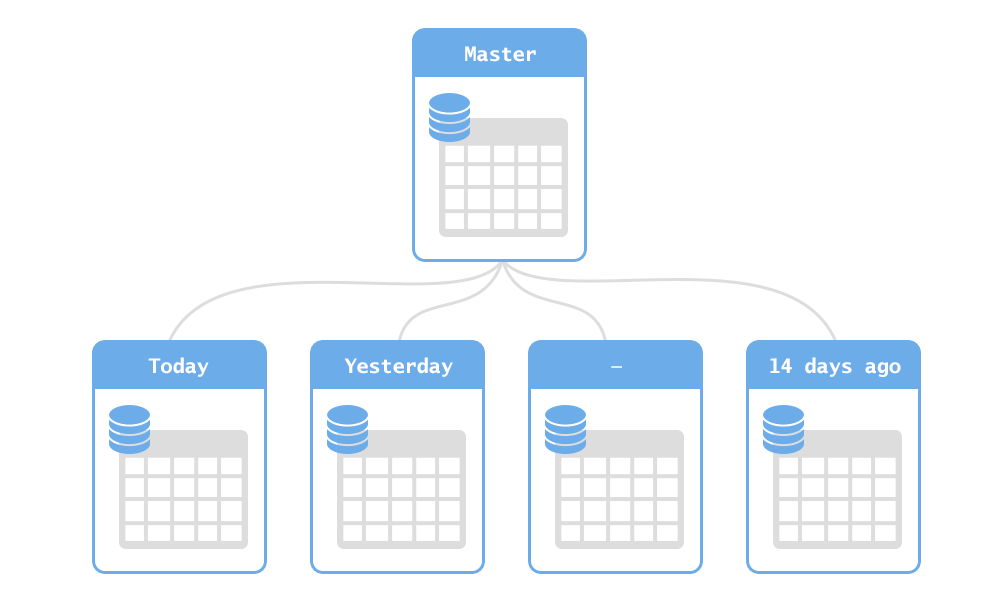

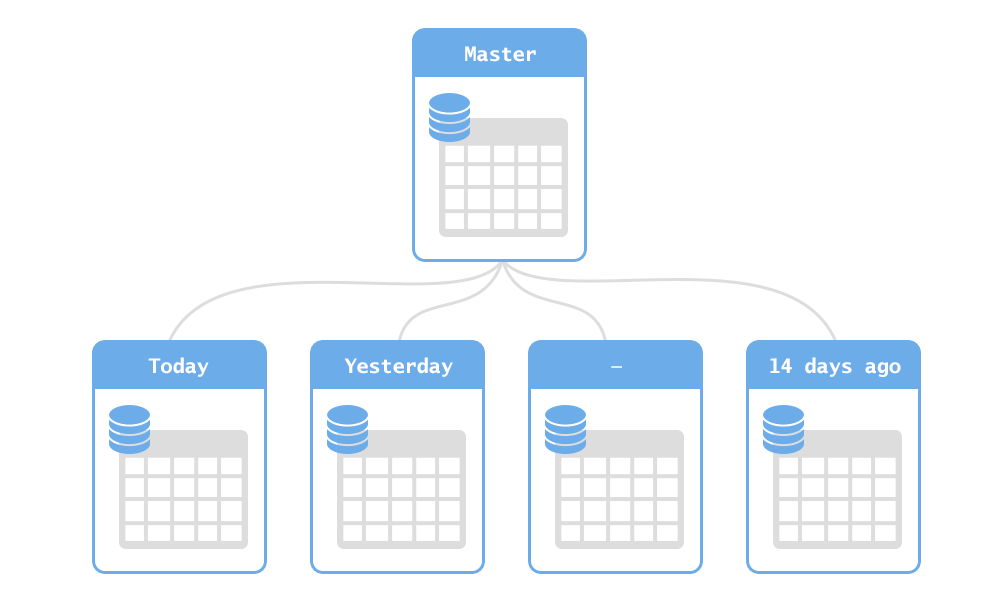

States for dividing is the practice of dividing large (based on number of records rather than columns) table into many small ones. And it is desirable that it is transparent to the application.

One of the rarely used features of PostgreSQL is the fact that it is object-relational database. And "object" being the key word here, because the objects (or rather classes) know what is called "inheritance". It is used to particioniranja.

Let's see what it's about.

I will create a normal table users:

the

Now, for the sake of completeness, let's add a few lines of additional code:

the

So, we've got a test table:

the

With some random data:

the

Now, when the table is ready, I can create the partition, which means the inherited table:

the

Thus, we got a new table, which has some interesting properties:

the

We now have all the indexes and constraints, but we lost the information about inheritance. But we can add it later using:

the

We could do this in one step, but then there are various unpleasant notification:

the

In any case, we now have two tables – the main and the first partition.

If I produce some kind of action – sampling/update/delete with users, both tables are scanned:

the

But if I go to the partition directly, the query will only be executed on it:

the

If we wanted, we could only refer to the users table without partitions, using the key word ONLY:

the

You may have noticed that I said that fetching/updating/deleting works on all partitions. What about the inserts? You insert need to add somewhere data, so that it always runs as if it had been used ONLY. So if I need to add a line to users_1, I have to do this:

the

Doesn't look too good, but don't worry, there are ways to get around that.

Let's try to make States for dividing. First we need to decide what will be the key particioniranja – in other words, which algorithm will be chosen partition.

There are a couple of the most obvious:

the

There are a couple of other, less used options, like "particioniranja hash from user name".

Why you should use one scheme rather than another? Let us look at their advantages and disadvantages:

the

The latter approach, a hashed user names is very interesting. Let's see what happens there.

For starters, I need to create more partitions:

the

Now the users table has 10 partitions:

the

In PostgreSQL, you have the option constraint_exclusion. And if it is set to "on" or "partition", PostgreSQL will skip the partition that cannot contain matching lines.

My Pg is set by default:

the

So, since all my partitions and base of the table there is no sensible limit, so any query will scan from all 11 tables (main and 10 partitions):

the

It's not too effective, but we can put the restriction.

For example, our partition was formed by the method of particioniranja by id, and each partition is stored 100,000 identifiers.

We can add several constraints:

the

Now repeat the previous query:

the

It only scans 2 tables: the main table (which now contains all of the details, and no restrictions, so it can't be deleted) and a suitable partition.

Great, right?

We can add similar conditions for particioniranja username or created_on. But look what happens when key particioniranja more complex:

the

In case you are not aware, hashtext() takes a string and returns an integer in the range -2147483648 to 2147483647.

With simple arithmetic we know that abs(hashtext(string)) % 10 will always return a value in the range 0..9, and it is easy to calculate for any parameter.

Does this PostgreSQL?

the

No. Don't know. In fact, PostgreSQL can do the automatic exclusion of files only to inspections based on the range (or the equality). Anything based on features. Even the simple module number – this is overkill:

the

It's sad. Because the keys particioniranja based on the modules of the numbers, there's one huge (in my opinion) advantage is stable, the number of partitions. You don't have to create them in the future, unless you decide to produce States for dividing again upon reaching a higher volume of data.

Does this mean that you can't use a complex (based on the functions or modules from the numbers) keys particioniranja? No. You can use them, but then the queries get more complicated:

the

Here I added one more condition, that is:

the

PostgreSQL maybe in the process of parsing the expression to rewrite it:

the

because he knows that the % operator for integers is is immutable. And now, as part of the query, I have the exact key particioniranja – id % 10 = 3. Thus, Pg can only use the partition that either have no key particioniranja (i.e. base table), or have a key corresponding to the request.

Is it worth to introduce additional complexity – you decide.

If you prefer not to change the queries, and you'll be able to add new partition from time to time, you can check out PG Partition Manger, written by my former colleague Keith Fiske is a set of functions that you run manually to determine the partitions, and another one that you start at the crown, and it takes care of creating new partitions for future data.

I have already mentioned paste, but did not explain how to bypass the problem with the inserts that need to be added to the partitions.

Overall, this is a job for a trigger. Pg_partman from China generates these triggers for you, but I want you to understand what is happening, and not used pg_partman as a "black box" but rather as an auxiliary tool that does the tedious work for you.

Now my circuit particioniranja based on the module of the number (as far as I know, partman so can not do), so let's write function of the trigger. It will be called when you insert data into users table and need to redirect inserts to the appropriate partition. So, you write:

the

And now the definition of the trigger:

the

Try to add the line:

the

View, visible data:

the

Looks good, but where are they? In the main table?

the

No. So maybe, in the right partition?

the

Yes. The trigger worked. But this method has one drawback. Namely, the “RETURNING" does not work:

the

This is because, from the point of view of the contractor, insert nothing back – a trigger returned NULL.

I have not yet managed to find a solution to this problem. In their cases I just prefer to get the initial value of the key in advance, using the nextval () and then insert the finished value, so it is already there after insert:

the

To all this there is one clarification. Routing every insertion through the trigger slows them down, because for each line of PG will need to perform another “insert".

For urgent bulk inserts best solution is to get them to work directly with the partitions. So, for example, instead of

the

you first find out how many IDs you have, for example, in this way:

the

And then issue the appropriate

the

Not the most comfortable way, but it can be useful if you are importing large amounts of data in particioniranje table.

So now you need to understand what States for dividing and how it works. The next question in the header was: why?

Answer it is relatively easy: for performance or ease of maintenance.

As a simple example, let's take the users table, in which rows of 1 billion (1,000,000,000).

Search in it would be progressive to go up even with the index, simply because the depth of the index will increase.

Let's drop all the partition and trigger particioniranja:

the

Now in the users table of 10,000 rows. A simple search on a user name takes 0.020 MS is the best time from three attempts.

If I add more rows:

the

the same search is 0.025 MS. The increase in search time 0.005 MS may be small, but we were still only 110,000 rows, and no other tables, so that the whole table with indexes fits in memory.

Of course, your States for dividing is to be meaningful. For example, if you usually search by user name, it is meaningless to do States for dividing id – Pg will have to search on all partitions (this may become meaningful in the future, but I will tell you that at the end of the article).

That is, you need to decide what you normally request, whether the search for some kind of key or perhaps you are usually looked at only recent data? And partitionierung thus, to limit the number of partitions that Pg need to scan.

It is important that States for dividing makes your life easier, especially if you're more of a DBA than a programmer. Any problem on maintenance (create index, vacuum, pg_reorg/pg_repack, pg_dump) can be efficiently divided into as many subtasks as you have partitions. So instead of one transaction hours to Repack a large table you will have 20 much faster and uses less disk space transaction, and the result will be the same!

Of course, the good news is not limited. In the States for dividing has one big disadvantage: you can't have foreign keys pointing to partitionierung table.

It just doesn't work. You could have foreign keys pointing straight to the partition, but it (usually) is meaningless.

Is this a big problem for you personally depends on your uscaca. It seems to me that in most cases, when we reach the tables large enough order to States for dividing was justified, the application is tested well enough, and we can deal with the lack of foreign key. Besides, we can always add a task in cron to test the presence of "bad" values.

Now we know what States for dividing, how it works and why used. The only question left is: how to convert a table in partitionierung. Usually, the app is not created with partitionierungen tables – in the beginning it does not make sense. But, soon, you will have some table with many rows and you think: "I had to partitionierung it immediately when you create".

But maybe we can still partitionierung when the application is already running? With a minimum of problems?

Let's see. For test I created the database pgbench 97 GB. Most of it, 83 GB, is in the pgbench_accounts table, which contains 666,600,000 records.

Scheme from this table like this:

the

And all requests to it are based on the aid column, which contains values from 1 to 666,600,000.

So let's partitionieren her based on the range of aid values.

Let's say I put in each partition and 10 million rows, then I will need 67 partitions.

But how can I check that my actions will not break? Very simple. I'll run pgbench in a loop. I'm not interested in accurate reporting speeds, enough information about how much my job affects what pgbench does.

With these thoughts I ran the function:

the

It will run a 10 second test and save the statistics to a file so I can later trace the relationship of the result of my work in the States for dividing.

When everything is ready, I will create the partition with checks in the right places:

the

the partition is ready, and I can verify that the checks used:

the

Now you need to add trigger-”router”:

the

That's nice, but it would only work for svezhesrublennyj lines, and I had 666 million rows in the source table. What to do with it?

I need to move them. It's relatively simple in theory, but there are a couple of pitfalls:

The second problem can be mitigated if working with batches of data. But we can't use SQL.

From time to time someone asks about how to split a large transaction into portions and call it with one sql function that will iteratively process the data portion. This approach has one fundamental problem: the function call is the transaction. So all this function does is going to happen in one transaction. So the blocking issue is not solved.

But we can use psql (or ruby, perl, python), moving with each serving only a small number of rows, and thus interlocking the main table for a short time.

In General, a single query will look like this:

the

I chose the serving size, 1000, is a sufficiently small value, so that the process is not delayed, and large enough for the number of servings was not excessive (666 thousand).

Now let's create the batch file:

the

When I run it in psql, it created the file /tmp/run.batch.migration.sql, which is quite voluminous (97 GB) because it contains 666,600 requests like these:

the

Now, when everything is ready, I can start the process (of course, using "screen" or "tmux", so that nothing is lost, if the ssh connection to the server will break):

the

It will take some time. In the case of my test database, the average package is handled for ~ 92мс, and so I have 17 hours of moving data around.

In reality, it only took 7 hours. Well.

At the end of the table pgbench_accounts still weighs ~ 83Гб (I think my disk is not fast enough to cope with pgbench, displacement and vacuum).

But I checked and it seems that all rows are moved to the partition:

the

How about the speed of pgbench during the move process?

There were 4 phases:

Results?

the

Yes, all movement slowed. But note, please, that this is a common personal computer with SATA drives, not SSD, is under constant high load – pgbench ran your queries as quickly as I could.

In addition, the slight slowdown was due to the fact that vacuum not too good with the deletions. In my opinion, the result is absolutely acceptable.

At the end I could do:

the

And then to check if everything is OK:

the

All this was done without any errors and without interruptions of the "real applications".

In the end I will add that the States for dividing soon (relatively) it gets steeper. Recently we can store partition on different servers. And now work is underway (although it is unlikely this update will appear sooner 9.6) to give the opportunity to conduct a parallel scan that will significantly improve the whole process.

I hope this text will be useful for you.

What other aspects of particioniranja tables in PostgreSQL, would you like to discuss? We will be glad to add the program of the conference PG Day'16 Russia the most interesting topics for you! We have opened sales early bird tickets, hurry to register at the lowest price!

Article based on information from habrahabr.ru

Lately I've noticed that more and more often faced with cases where it would be possible to use States for dividing. Although, theoretically, most people know about its existence, actually this feature not too well understand, and some even feared.

So I will try to explain to the best of their knowledge and ability, what it is, why it should be used and how to do it.

As you probably know, PostgreSQL has tables and the tables have data. Sometimes it's just a few lines, and sometimes billions.

States for dividing is the practice of dividing large (based on number of records rather than columns) table into many small ones. And it is desirable that it is transparent to the application.

One of the rarely used features of PostgreSQL is the fact that it is object-relational database. And "object" being the key word here, because the objects (or rather classes) know what is called "inheritance". It is used to particioniranja.

Let's see what it's about.

I will create a normal table users:

the

$ create table users (

id serial primary key,

username text not null unique,

password text,

created_on timestamptz not null,

last_logged_on timestamptz not null

);

Now, for the sake of completeness, let's add a few lines of additional code:

the

$ insert into users (username, password, created_on, last_logged_on)

select

random_string( (random() * 4 + 5)::int4),

random_string( 20 ),

now() - '2 years'::interval * random(),

now() - '2 years'::interval * random()

from

generate_series(1, 10000);

$ newest_users create index on users (created_on);

So, we've got a test table:

the

$ \d

Table "public.users"

Column | Type | Modifiers

----------------+--------------------------+----------------------------------------------------

id | integer | not null default nextval('users_id_seq'::regclass)

username | text | not null

password | text |

created_on | timestamp with time zone | not null

last_logged_on | timestamp with time zone | not null

Indexes:

"users_pkey" PRIMARY KEY, btree (id)

"users_username_key" UNIQUE CONSTRAINT, btree (username)

"newest_users" btree (created_on)

With some random data:

the

$ select * from users limit 10;

id | username | password | created_on | last_logged_on

----+----------+----------------------+-------------------------------+-------------------------------

1 | ityfce3 | 2ukgbflj_l2ndo3vilt2| 2015-01-02 16:56:41.346113+01 | 2015-04-15 12:34:58.318913+02

2 | _xg_pv | u8hy20aifyblg9f3_rf2| 2014-09-27 05:41:05.317313+02 | 2014-08-07 14:46:14.197313+02

3 | uvi1wo | h09ae85v_f_cx0gf6_8r| 2013-06-17 18:48:44.389313+02 | 2014-06-03 06:53:49.640513+02

4 | o6rgs | vzbrkwhnsucxco5pjep0| 2015-01-30 11:33:25.150913+01 | 2013-11-05 07:18:47.730113+01

5 | nk61jw77 | lidk_mnpe_olffmod7ed| 2014-06-15 07:18:34.597313+02 | 2014-03-21 17:42:44.763713+01

6 | 3w326_2u | pyoqg87feemojhql7jrn| 2015-01-20 05:41:54.133313+01 | 2014-09-07 20:33:23.682113+02

7 | m9rk9mnx | 6pvt94s6ol46kn0yl62b| 2013-07-17 15:13:36.315713+02 | 2013-11-12 10:53:06.123713+01

8 | adk6c | egfp8re0z492e6ri8urz| 2014-07-23 11:41:11.883713+02 | 2013-10-22 07:19:36.200513+02

9 | rsyaedw | ond0tie9er92oqhmdj39| 2015-05-11 16:45:40.472513+02 | 2013-08-31 17:29:18.910913+02

10 | prlobe46 | _3br5v97t2xngcd7xz4n| 2015-01-10 20:13:29.461313+01 | 2014-05-04 06:25:56.072513+02

(10 rows)

Now, when the table is ready, I can create the partition, which means the inherited table:

the

$ create table users_1 () inherits (users);

$ \d users_1

Table "public.users_1"

Column | Type | Modifiers

----------------+--------------------------+----------------------------------------------------

id | integer | not null default nextval('users_id_seq'::regclass)

username | text | not null

password | text |

created_on | timestamp with time zone | not null

last_logged_on | timestamp with time zone | not null

Inherits: users

Thus, we got a new table, which has some interesting properties:

the

-

the

- it uses the same sequence as the base table for its column id; the

- all columns have the same definition, including not null constraints; the

- has neither a primary key nor unique constraints for the user name, no index for created_on. Let's try this again, but this time with a more “explosive” effect:

the

$ drop table users_1;

$ create table users_1 ( like users including all );

$ \d users_1

Table "public.users_1"

Column | Type | Modifiers

----------------+--------------------------+----------------------------------------------------

id | integer | not null default nextval('users_id_seq'::regclass)

username | text | not null

password | text |

created_on | timestamp with time zone | not null

last_logged_on | timestamp with time zone | not null

Indexes:

"users_1_pkey" PRIMARY KEY, btree (id)

"users_1_username_key" UNIQUE CONSTRAINT, btree (username)

"users_1_created_on_idx" btree (created_on)

We now have all the indexes and constraints, but we lost the information about inheritance. But we can add it later using:

the

$ alter table users users_1 inherit;

$ \d users_1

Table "public.users_1"

Column | Type | Modifiers

----------------+--------------------------+----------------------------------------------------

id | integer | not null default nextval('users_id_seq'::regclass)

username | text | not null

password | text |

created_on | timestamp with time zone | not null

last_logged_on | timestamp with time zone | not null

Indexes:

"users_1_pkey" PRIMARY KEY, btree (id)

"users_1_username_key" UNIQUE CONSTRAINT, btree (username)

"users_1_created_on_idx" btree (created_on)

Inherits: users

We could do this in one step, but then there are various unpleasant notification:

the

$ drop table users_1;

$ create table users_1 ( like users including all ) inherits (users);

NOTICE: merging column "id" with inherited definition

NOTICE: merging column "username" with inherited definition

NOTICE: merging column "password" with inherited definition

NOTICE: merging column "created_on" with inherited definition

NOTICE: merging column "last_logged_on" with inherited definition

$ \d users_1

Table "public.users_1"

Column | Type | Modifiers

----------------+--------------------------+----------------------------------------------------

id | integer | not null default nextval('users_id_seq'::regclass)

username | text | not null

password | text |

created_on | timestamp with time zone | not null

last_logged_on | timestamp with time zone | not null

Indexes:

"users_1_pkey" PRIMARY KEY, btree (id)

"users_1_username_key" UNIQUE CONSTRAINT, btree (username)

"users_1_created_on_idx" btree (created_on)

Inherits: users

In any case, we now have two tables – the main and the first partition.

If I produce some kind of action – sampling/update/delete with users, both tables are scanned:

the

$ explain analyze select * from users where id = 123;

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------

Append (cost=0.29..16.47 rows=2 width=66) (actual time=0.008..0.009 rows=1 loops=1)

-> Index Scan using users_pkey on users (cost=0.29..8.30 rows=1 width=48) (actual time=0.008..0.008 rows=1 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_1_pkey on users_1 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

Planning time: 0.327 ms

Execution time: 0.031 ms

(7 rows)

But if I go to the partition directly, the query will only be executed on it:

the

$ explain analyze select * from users_1 where id = 123;

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------

Index Scan using users_1_pkey on users_1 (cost=0.15..8.17 rows=1 width=84) (actual time=0.002..0.002 rows=0 loops=1)

Index Cond: (id = 123)

Planning time: 0.162 ms

Execution time: 0.022 ms

(4 rows)

If we wanted, we could only refer to the users table without partitions, using the key word ONLY:

the

$ explain analyze select * from only users where id = 123;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------

Index Scan using users_pkey on users (cost=0.29..8.30 rows=1 width=48) (actual time=0.008..0.008 rows=1 loops=1)

Index Cond: (id = 123)

Planning time: 0.229 ms

Execution time: 0.031 ms

(4 rows)

You may have noticed that I said that fetching/updating/deleting works on all partitions. What about the inserts? You insert need to add somewhere data, so that it always runs as if it had been used ONLY. So if I need to add a line to users_1, I have to do this:

the

INSERT INTO users_1 ...

Doesn't look too good, but don't worry, there are ways to get around that.

Let's try to make States for dividing. First we need to decide what will be the key particioniranja – in other words, which algorithm will be chosen partition.

There are a couple of the most obvious:

the

-

the

- States for dividing by the date – for example, choose the partition based on the year in which the account has been created; the

- States for dividing the range of IDs – for example, the first million users, second million users, and so on; the

- States for dividing by anything else – for example, the first letter of the user name.

There are a couple of other, less used options, like "particioniranja hash from user name".

Why you should use one scheme rather than another? Let us look at their advantages and disadvantages:

the

-

the

- States for dividing by date:

the-

the

- advantages:

the-

the

- easy to understand; the

- the number of rows in this table will be fairly stable;

the - disadvantages:

the-

the

- requires support from time to time we have to add the new partition; the

- search by user name or id will require scanning all partitions;

the - advantages:

- States for dividing by id:

the-

the

- advantages:

the-

the

- easy to understand; the

- the number of rows in the partition will be 100% stable;

the - disadvantages:

the-

the

- requires support from time to time we have to add the new partition; the

- search by user name or id will require scanning all partitions;

the - advantages:

- States for dividing the first letter of the user name:

the-

the

- advantages:

the-

the

- easy to understand; the

- no support – there is a specific set of partitions and we never have to add a new one;

the - disadvantages:

the-

the

- the number of rows in the partitions will grow steadily; the

- in some partitions will be significantly more rows than others (more people with nicknames starting with “t*" than the “y*"); the

- search by id would require scanning all partitions;

the - advantages:

- States for dividing hash user name:

the-

the

- advantages:

the-

the

- no support – there is a specific set of partitions and we never have to add a new one; the

- lines will still be shared between partitions;

the - disadvantages:

the-

the

- the number of rows in the partitions will grow steadily; the

- search by id would require scanning all partitions; the

- search by user name will scan only one partition, but only when using the optional conditions.

- advantages:

The latter approach, a hashed user names is very interesting. Let's see what happens there.

For starters, I need to create more partitions:

the

$ create table users_2 ( like users including all );

$ alter table users users_2 inherit;

...

$ create table users_10 ( like users including all );

$ alter table users users_10 inherit;

Now the users table has 10 partitions:

the

$ \d users

Table "public.users"

Column | Type | Modifiers

----------------+--------------------------+----------------------------------------------------

id | integer | not null default nextval('users_id_seq'::regclass)

username | text | not null

password | text |

created_on | timestamp with time zone | not null

last_logged_on | timestamp with time zone | not null

Indexes:

"users_pkey" PRIMARY KEY, btree (id)

"users_username_key" UNIQUE CONSTRAINT, btree (username)

"newest_users" btree (created_on)

Number of child tables: 10 (Use \d+ to list them.)

In PostgreSQL, you have the option constraint_exclusion. And if it is set to "on" or "partition", PostgreSQL will skip the partition that cannot contain matching lines.

My Pg is set by default:

the

$ show constraint_exclusion;

constraint_exclusion

----------------------

partition

(1 row)

So, since all my partitions and base of the table there is no sensible limit, so any query will scan from all 11 tables (main and 10 partitions):

the

$ explain analyze select * from users where id = 123;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------

-> Index Scan using users_pkey on users (cost=0.29..8.30 rows=1 width=48) (actual time=0.007..0.007 rows=1 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_1_pkey on users_1 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_2_pkey on users_2 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_3_pkey on users_3 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_4_pkey on users_4 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_5_pkey on users_5 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_6_pkey on users_6 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_7_pkey on users_7 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_8_pkey on users_8 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_9_pkey on users_9 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_10_pkey on users_10 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

Planning time: 1.321 as next ms

Execution time: 0.087 ms

(25 rows)

It's not too effective, but we can put the restriction.

For example, our partition was formed by the method of particioniranja by id, and each partition is stored 100,000 identifiers.

We can add several constraints:

the

$ users_1 alter table add constraint partition_check check (id >= 0 and id < 100000);

$ alter table users_2 partition_check add constraint check (id >= 100000 and id < 200000);

...

$ alter table add constraint users_10 partition_check check (id > = 900000 and id < 1000000);

Now repeat the previous query:

the

$ explain analyze select * from users where id = 123;

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------

Append (cost=0.29..16.47 rows=2 width=66) (actual time=0.008..0.009 rows=1 loops=1)

-> Index Scan using users_pkey on users (cost=0.29..8.30 rows=1 width=48) (actual time=0.008..0.009 rows=1 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_1_pkey on users_1 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

Planning time: 1.104 ms

Execution time: 0.031 ms

(7 rows)

It only scans 2 tables: the main table (which now contains all of the details, and no restrictions, so it can't be deleted) and a suitable partition.

Great, right?

We can add similar conditions for particioniranja username or created_on. But look what happens when key particioniranja more complex:

the

$ alter table users_1 partition_check drop constraint, add constraint partition_check check (abs( hashtext(username) ) % 10 = 0);

$ alter table users_2 partition_check drop constraint, add constraint partition_check check (abs( hashtext(username) ) % 10 = 1);

...

$ alter table users_10 partition_check drop constraint, add constraint partition_check check (abs( hashtext(username) ) % 10 = 9);

In case you are not aware, hashtext() takes a string and returns an integer in the range -2147483648 to 2147483647.

With simple arithmetic we know that abs(hashtext(string)) % 10 will always return a value in the range 0..9, and it is easy to calculate for any parameter.

Does this PostgreSQL?

the

$ explain analyze select * from users where username = 'depesz';

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------------------------

Append (cost=0.29..89.98 rows=11 width=81) (actual time=0.023..0.023 rows=0 loops=1)

-> Index Scan using users_username_key on users (cost=0.29..8.30 rows=1 width=48) (actual time=0.016..0.016 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_1_username_key on users_1 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_2_username_key on users_2 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_3_username_key on users_3 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_4_username_key on users_4 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_5_username_key on users_5 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_6_username_key on users_6 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_7_username_key on users_7 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

-> Index Scan using users_8_username_key on users_8 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_9_username_key on users_9 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

-> Index Scan using users_10_username_key on users_10 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (username = 'depesz'::text)

Planning time: 1.092 ms

Execution time: 0.095 ms

(25 rows)

No. Don't know. In fact, PostgreSQL can do the automatic exclusion of files only to inspections based on the range (or the equality). Anything based on features. Even the simple module number – this is overkill:

the

$ users_1 alter table drop constraint partition_check, partition_check add constraint check ( id % 10 = 0);

$ alter table users_2 partition_check drop constraint, add constraint partition_check check ( id % 10 = 1);

...

$ alter table drop constraint users_10 partition_check, partition_check add constraint check ( id % 10 = 9);

$ explain analyze select * from users where id = 123;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------

Append (cost=0.29..89.98 rows=11 width=81) (actual time=0.009..0.016 rows=1 loops=1)

-> Index Scan using users_pkey on users (cost=0.29..8.30 rows=1 width=48) (actual time=0.009..0.009 rows=1 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_1_pkey on users_1 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_2_pkey on users_2 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_3_pkey on users_3 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_4_pkey on users_4 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_5_pkey on users_5 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_6_pkey on users_6 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_7_pkey on users_7 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_8_pkey on users_8 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_9_pkey on users_9 (cost=0.15..8.17 rows=1 width=84) (actual time=0.000..0.000 rows=0 loops=1)

Index Cond: (id = 123)

-> Index Scan using users_10_pkey on users_10 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

Planning time: 0.973 ms

Execution time: 0.086 ms

(25 rows)

It's sad. Because the keys particioniranja based on the modules of the numbers, there's one huge (in my opinion) advantage is stable, the number of partitions. You don't have to create them in the future, unless you decide to produce States for dividing again upon reaching a higher volume of data.

Does this mean that you can't use a complex (based on the functions or modules from the numbers) keys particioniranja? No. You can use them, but then the queries get more complicated:

the

$ explain analyze select * from users where id = 123 and id % 10 = 123 % 10;

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------

Append (cost=0.29..16.48 rows=2 width=66) (actual time=0.010..0.011 rows=1 loops=1)

-> Index Scan using users_pkey on users (cost=0.29..8.31 rows=1 width=48) (actual time=0.010..0.010 rows=1 loops=1)

Index Cond: (id = 123)

Filter: ((id % 10) = 3)

-> Index Scan using users_4_pkey on users_4 (cost=0.15..8.17 rows=1 width=84) (actual time=0.001..0.001 rows=0 loops=1)

Index Cond: (id = 123)

Filter: ((id % 10) = 3)

Planning time: 1.018 ms

Execution time: 0.033 ms

(9 rows)

Here I added one more condition, that is:

the

id % 10 = 123 % 10

PostgreSQL maybe in the process of parsing the expression to rewrite it:

the

id % 10 = 3

because he knows that the % operator for integers is is immutable. And now, as part of the query, I have the exact key particioniranja – id % 10 = 3. Thus, Pg can only use the partition that either have no key particioniranja (i.e. base table), or have a key corresponding to the request.

Is it worth to introduce additional complexity – you decide.

If you prefer not to change the queries, and you'll be able to add new partition from time to time, you can check out PG Partition Manger, written by my former colleague Keith Fiske is a set of functions that you run manually to determine the partitions, and another one that you start at the crown, and it takes care of creating new partitions for future data.

I have already mentioned paste, but did not explain how to bypass the problem with the inserts that need to be added to the partitions.

Overall, this is a job for a trigger. Pg_partman from China generates these triggers for you, but I want you to understand what is happening, and not used pg_partman as a "black box" but rather as an auxiliary tool that does the tedious work for you.

Now my circuit particioniranja based on the module of the number (as far as I know, partman so can not do), so let's write function of the trigger. It will be called when you insert data into users table and need to redirect inserts to the appropriate partition. So, you write:

the

$ create function partition_for_users() returns trigger as $$

DECLARE

v_parition_name text;

BEGIN

v_parition_name := format( 'users_%s', 1 + NEW.id % 10 );

execute 'INSERT INTO' || v_parition_name || ' VALUES ( ($1).* )' USING NEW;

return NULL;

END;

$$ language plpgsql;

And now the definition of the trigger:

the

$ partition_users create trigger before insert on users for each row execute procedure partition_for_users();

Try to add the line:

the

$ insert into users (username, password, created_on, last_logged_on)

values (

'depesz',

random_string( 20 ),

now() - '2 years'::interval * random(),

now() - '2 years'::interval * random()

);

$ select currval('users_id_seq');

currval

---------

10003

(1 row)

View, visible data:

the

$ select * from users where username = 'depesz';

id | username | password | created_on | last_logged_on

-------+----------+----------------------+-------------------------------+-------------------------------

10003 | depesz | bp7zwy8k3t3a37chf1hf| 2014-10-24 02:45:51.398824+02 | 2015-02-05 18:24:57.072424+01

(1 row)

Looks good, but where are they? In the main table?

the

$ select * from only users where username = 'depesz';

id | username | password | created_on | last_logged_on

----+----------+----------+------------+----------------

(0 rows)

No. So maybe, in the right partition?

the

$ select * from users_4 where username = 'depesz';

id | username | password | created_on | last_logged_on

-------+----------+----------------------+-------------------------------+-------------------------------

10003 | depesz | bp7zwy8k3t3a37chf1hf| 2014-10-24 02:45:51.398824+02 | 2015-02-05 18:24:57.072424+01

Yes. The trigger worked. But this method has one drawback. Namely, the “RETURNING" does not work:

the

$ insert into users (username, password, created_on, last_logged_on)

values (

'test',

random_string( 20 ),

now() - '2 years'::interval * random(),

now() - '2 years'::interval * random()

)

returning *;

id | username | password | created_on | last_logged_on

----+----------+----------+------------+----------------

(0 rows)

This is because, from the point of view of the contractor, insert nothing back – a trigger returned NULL.

I have not yet managed to find a solution to this problem. In their cases I just prefer to get the initial value of the key in advance, using the nextval () and then insert the finished value, so it is already there after insert:

the

$ select nextval('users_id_seq');

nextval

---------

10005

(1 row)

$ insert into users (id, username, password, created_on, last_logged_on)

values (

10005,

'test',

random_string( 20 ),

now() - '2 years'::interval * random(),

now() - '2 years'::interval * random()

);

To all this there is one clarification. Routing every insertion through the trigger slows them down, because for each line of PG will need to perform another “insert".

For urgent bulk inserts best solution is to get them to work directly with the partitions. So, for example, instead of

the

COPY users FROM stdin;

....

\.

you first find out how many IDs you have, for example, in this way:

the

select nextval('users_id_seq') from generate_series(1, 100);

And then issue the appropriate

the

COPY users_p1 FROM stdin;

....

\.

Users_p2 COPY FROM stdin;

....

\.

...

Not the most comfortable way, but it can be useful if you are importing large amounts of data in particioniranje table.

So now you need to understand what States for dividing and how it works. The next question in the header was: why?

Answer it is relatively easy: for performance or ease of maintenance.

As a simple example, let's take the users table, in which rows of 1 billion (1,000,000,000).

Search in it would be progressive to go up even with the index, simply because the depth of the index will increase.

Let's drop all the partition and trigger particioniranja:

the

$ drop table users_1;

$ drop table users_2;

...

$ drop table users_10;

$ drop trigger partition_users on users;

Now in the users table of 10,000 rows. A simple search on a user name takes 0.020 MS is the best time from three attempts.

If I add more rows:

the

$ insert into users (username, password, created_on, last_logged_on)

select

random_string( (random() * 4 + 5)::int4),

random_string( 20 ),

now() - '2 years'::interval * random(),

now() - '2 years'::interval * random()

from

generate_series(1, 100000);

the same search is 0.025 MS. The increase in search time 0.005 MS may be small, but we were still only 110,000 rows, and no other tables, so that the whole table with indexes fits in memory.

Of course, your States for dividing is to be meaningful. For example, if you usually search by user name, it is meaningless to do States for dividing id – Pg will have to search on all partitions (this may become meaningful in the future, but I will tell you that at the end of the article).

That is, you need to decide what you normally request, whether the search for some kind of key or perhaps you are usually looked at only recent data? And partitionierung thus, to limit the number of partitions that Pg need to scan.

It is important that States for dividing makes your life easier, especially if you're more of a DBA than a programmer. Any problem on maintenance (create index, vacuum, pg_reorg/pg_repack, pg_dump) can be efficiently divided into as many subtasks as you have partitions. So instead of one transaction hours to Repack a large table you will have 20 much faster and uses less disk space transaction, and the result will be the same!

Of course, the good news is not limited. In the States for dividing has one big disadvantage: you can't have foreign keys pointing to partitionierung table.

It just doesn't work. You could have foreign keys pointing straight to the partition, but it (usually) is meaningless.

Is this a big problem for you personally depends on your uscaca. It seems to me that in most cases, when we reach the tables large enough order to States for dividing was justified, the application is tested well enough, and we can deal with the lack of foreign key. Besides, we can always add a task in cron to test the presence of "bad" values.

Now we know what States for dividing, how it works and why used. The only question left is: how to convert a table in partitionierung. Usually, the app is not created with partitionierungen tables – in the beginning it does not make sense. But, soon, you will have some table with many rows and you think: "I had to partitionierung it immediately when you create".

But maybe we can still partitionierung when the application is already running? With a minimum of problems?

Let's see. For test I created the database pgbench 97 GB. Most of it, 83 GB, is in the pgbench_accounts table, which contains 666,600,000 records.

Scheme from this table like this:

the

Table "public.pgbench_accounts"

Column | Type | Modifiers

----------+---------------+-----------

aid | integer | not null

bid | integer |

abalance | integer |

filler | character(84) |

Indexes:

"pgbench_accounts_pkey" PRIMARY KEY, btree (aid)

And all requests to it are based on the aid column, which contains values from 1 to 666,600,000.

So let's partitionieren her based on the range of aid values.

Let's say I put in each partition and 10 million rows, then I will need 67 partitions.

But how can I check that my actions will not break? Very simple. I'll run pgbench in a loop. I'm not interested in accurate reporting speeds, enough information about how much my job affects what pgbench does.

With these thoughts I ran the function:

the

$ while true

do

date

pgbench -T 10 -c 2 bench

done 2>&1 | tee pgbench.log

It will run a 10 second test and save the statistics to a file so I can later trace the relationship of the result of my work in the States for dividing.

When everything is ready, I will create the partition with checks in the right places:

the

do $$

declare

i int4;

aid_min INT4;

aid_max INT4;

begin

for i in 1..67

loop

aid_min := (i - 1) * 10000000 + 1;

aid_max := i * 10000000;

execute format('CREATE TABLE pgbench_accounts_p_%s ( like pgbench_accounts including all )', i );

execute format('ALTER TABLE pgbench_accounts_p_%s inherit pgbench_accounts', i);

execute format('ALTER TABLE pgbench_accounts_p_%s add constraint partitioning_check check ( aid > = %s AND aid <= %s )', i, aid_min, aid_max );

end loop;

end;

$$;

the partition is ready, and I can verify that the checks used:

the

$ explain analyze select * from pgbench_accounts where aid = 123;

QUERY PLAN

--------------------------------------------------------------------------------------------------------------------------------------------------------

Append (cost=0.57..16.75 rows=2 width=224) (actual time=6.468..6.473 rows=1 loops=1)

-> Index Scan using pgbench_accounts_pkey on pgbench_accounts (cost=0.57..8.59 rows=1 width=97) (actual time=..6.468 6.469 rows=1 loops=1)

Index Cond: (ID = 123)

-> Index Scan using pgbench_accounts_p_1_pkey on pgbench_accounts_p_1 (cost=0.14..8.16 rows=1 width=352) (actual time=0.004..0.004 rows=0 loops=1)

Index Cond: (ID = 123)

Planning time: 3.475 ms

Execution time: 6.497 ms

(7 rows)

Now you need to add trigger-”router”:

the

$ create function partition_for_accounts() returns trigger as $$

DECLARE

v_parition_name text;

BEGIN

v_parition_name := format( 'pgbench_accounts_p_%s', 1 + ( NOV.aid - 1 ) / 10000000 );

execute 'INSERT INTO' || v_parition_name || ' VALUES ( ($1).* )' USING NEW;

return NULL;

END;

$$ language plpgsql;

$ partition_users create trigger before insert on pgbench_accounts for each row execute procedure partition_for_accounts();

That's nice, but it would only work for svezhesrublennyj lines, and I had 666 million rows in the source table. What to do with it?

I need to move them. It's relatively simple in theory, but there are a couple of pitfalls:

-

the

- in Any case, both rows should not be visible at the same time for any transaction (that is, from the main table and partition). the

- I can not delete all rows and insert them into the partition, because it will block the entire base table, while the move.

The second problem can be mitigated if working with batches of data. But we can't use SQL.

From time to time someone asks about how to split a large transaction into portions and call it with one sql function that will iteratively process the data portion. This approach has one fundamental problem: the function call is the transaction. So all this function does is going to happen in one transaction. So the blocking issue is not solved.

But we can use psql (or ruby, perl, python), moving with each serving only a small number of rows, and thus interlocking the main table for a short time.

In General, a single query will look like this:

the

with x as (delete from only pgbench_accounts where aid between .. and .. returning *)

insert into appropriate_partition select * from x;

I chose the serving size, 1000, is a sufficiently small value, so that the process is not delayed, and large enough for the number of servings was not excessive (666 thousand).

Now let's create the batch file:

the

\pset format unaligned

\pset tuples_only true

\o /tmp/run.batch.migration.sql

SELECT

format(

'with x as (DELETE FROM ONLY pgbench_accounts WHERE aid > = %s AND aid <= %s returning *) INSERT INTO pgbench_accounts_p_%s SELECT * FROM x;',

i,

i + 999,

( i - 1 ) / 10000000 + 1

)

FROM

generate_series( 1, 666600000, 1000 ) i;

\o

When I run it in psql, it created the file /tmp/run.batch.migration.sql, which is quite voluminous (97 GB) because it contains 666,600 requests like these:

the

with x as (DELETE FROM ONLY pgbench_accounts WHERE aid > = 1 AND aid <= 1000 returning *) INSERT INTO pgbench_accounts_p_1 SELECT * FROM x;

with x as (DELETE FROM ONLY pgbench_accounts WHERE aid > = 1001 AND aid <= 2000 returning *) INSERT INTO pgbench_accounts_p_1 SELECT * FROM x;

with x as (DELETE FROM ONLY pgbench_accounts WHERE aid >= 2001 AND aid <= 3000 returning *) INSERT INTO pgbench_accounts_p_1 SELECT * FROM x;

Now, when everything is ready, I can start the process (of course, using "screen" or "tmux", so that nothing is lost, if the ssh connection to the server will break):

the

$ psql -d bench -f /tmp/run.batch.migration.sql

It will take some time. In the case of my test database, the average package is handled for ~ 92мс, and so I have 17 hours of moving data around.

In reality, it only took 7 hours. Well.

At the end of the table pgbench_accounts still weighs ~ 83Гб (I think my disk is not fast enough to cope with pgbench, displacement and vacuum).

But I checked and it seems that all rows are moved to the partition:

the

$ select count(*) from only pgbench_accounts;

count

-------

0

(1 row)

How about the speed of pgbench during the move process?

There were 4 phases:

-

the

- Before the migration. the

- After creating partitions. the

- After you create the trigger. the

- During travel.

Results?

the

phase | min | avg | max

---------+-----------+------------------+-----------

Phase 1 | 28.662223 | 64.0359512839506 | 87.219148

Phase 2 | 21.147816 | 56.2721418360656 | 75.967217

Phase 3 | 23.868018 | 58.6375074477612 | 75.335558

Phase 4 | 5.222364 | 23.6086916565574 | 65.770852

(4 rows)

Yes, all movement slowed. But note, please, that this is a common personal computer with SATA drives, not SSD, is under constant high load – pgbench ran your queries as quickly as I could.

In addition, the slight slowdown was due to the fact that vacuum not too good with the deletions. In my opinion, the result is absolutely acceptable.

At the end I could do:

the

$ truncate only pgbench_accounts;

And then to check if everything is OK:

the

$ select count(*) from pgbench_accounts;

count

-----------

666600000

(1 row)

All this was done without any errors and without interruptions of the "real applications".

In the end I will add that the States for dividing soon (relatively) it gets steeper. Recently we can store partition on different servers. And now work is underway (although it is unlikely this update will appear sooner 9.6) to give the opportunity to conduct a parallel scan that will significantly improve the whole process.

I hope this text will be useful for you.

What other aspects of particioniranja tables in PostgreSQL, would you like to discuss? We will be glad to add the program of the conference PG Day'16 Russia the most interesting topics for you! We have opened sales early bird tickets, hurry to register at the lowest price!

Комментарии

Отправить комментарий